Ubiquitous Computing Laboratory

The core mission of UCL is to build technologies that will enable computing devices to become pervasive and useful to our society. Research projects in UCL include AI for edge devices (tinyML), multimodal learning (vision, speech, text, point cloud), synthetic people, human-computer interfaces, autonomous robots and IoT protocols.

Some of our recently completed projects and publications (2021 and 2022):

Scene Text Recognition with Permuted Autoregressive Sequence Models

by Darwin Bautista and Rowel Atienza

(to appear in European Conference on Computer Vision (ECCV) 2022)

Preprint: https://arxiv.org/abs/2207.06966

GitHub: https://github.com/baudm/parseq

HuggingFace Live Demo: https://huggingface.co/spaces/baudm/PARSeq-OCR

PARSeq, our scene text recognition (STR) model based on permuted autoregressive sequence models, merges the strengths of an attention-based STR model (e.g., ViTSTR, also developed in UP) and a language model to read text such as product labels, signboards, road signs, car plates and paper bills. PARSeq achieves state-of-the-art (SOTA) results on STR benchmarks (91.9% accuracy) and on more challenging datasets. It establishes new SOTA results (96.0% accuracy) when trained on real data. PARSeq is optimal in accuracy versus parameter count, FLOPS, and latency because of its simple, unified structure and parallel token processing. Due to its extensive use of attention, it is robust on arbitrarily oriented text, which is common in real-world images.

![PARSeq figure](https://eee.upd.edu.ph/wp-content/uploads/elementor/thumbs/are-omitted-due-1-space-constraints.-B-E-and-P-stand-for-beginning-of-sequence-pvsj4u8xyfemnbk0s4s4yiiz3k46eq5jyeg2btz5lu.png)
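To give a rough feel for what "permuted autoregressive" means, the sketch below builds an attention mask from a decoding order: each position may only look at positions that come earlier in that order. This is a generic illustration of permutation-based autoregressive ordering, not the PARSeq implementation; the function name `permutation_attention_mask` and the mask layout are assumptions made for this example. The actual model is in the GitHub repository linked above.

```python
def permutation_attention_mask(order):
    """Build a visibility mask for one decoding order (hypothetical helper).

    order[i] is the character position predicted at step i.
    Returns allowed[q][k], which is True when position q may attend to
    position k, i.e. when k is predicted before q in this order.
    """
    n = len(order)
    if sorted(order) != list(range(n)):
        raise ValueError("order must be a permutation of 0..n-1")
    # Map each position to the step at which it is predicted.
    step_of = {pos: step for step, pos in enumerate(order)}
    return [[step_of[k] < step_of[q] for k in range(n)] for q in range(n)]


# Left-to-right order gives the usual causal mask (strictly lower triangular).
left_to_right = permutation_attention_mask([0, 1, 2])

# Right-to-left order flips the mask (strictly upper triangular).
right_to_left = permutation_attention_mask([2, 1, 0])
```

Training over several such orders, rather than only left to right, is the general idea behind permutation-based sequence modeling.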

Depth Pruning with Auxiliary Networks for tinyML

IEEE ICASSP 2022

by Josen De Leon (UP and Samsung R&D PH) and Rowel Atienza

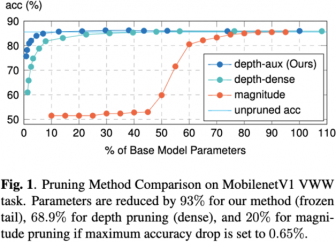

We developed a technique that significantly reduces the footprint and latency of deep learning models by removing the model head and replacing it with an efficient auxiliary network. On the Visual Wake Words dataset (VWW, a person detection dataset), we found that we can reduce the number of parameters by up to 93% while reducing the accuracy by only 0.65%. When deployed on an ARM Cortex-M0, the MobileNet-V1 footprint is reduced from 336KB to 71KB and the latency from 904ms to 551ms, while counter-intuitively increasing the accuracy by 1%.
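For readers who prefer relative numbers, the short calculation below converts the Cortex-M0 figures quoted above into percentage reductions. The helper `relative_reduction` is a name introduced here for illustration; only the four measurements come from the text.

```python
def relative_reduction(before, after):
    """Fraction by which a quantity shrank, e.g. 0.25 for a 25% reduction."""
    return (before - after) / before


# MobileNet-V1 on ARM Cortex-M0, values quoted in the paragraph above.
footprint_cut = relative_reduction(336, 71)   # KB
latency_cut = relative_reduction(904, 551)    # ms

print(f"Footprint reduced by {footprint_cut:.1%}")  # 78.9%
print(f"Latency reduced by {latency_cut:.1%}")      # 39.0%
```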

Improving Model Generalization by Agreement of Learned Representations from Data Augmentation

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) 2022.

by Rowel Atienza

GitHub: https://github.com/roatienza/agmax

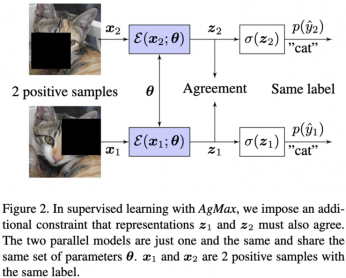

If data augmentation improves model performance, why not apply two different data augmentations to a given input and require that the model agree on the output? This simple idea further improves model performance on recognition, segmentation and detection. We call our method Agreement Maximization, or AgMax.
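The idea of rewarding agreement between two augmented views can be sketched in a few lines. The example below is a minimal illustration, not the AgMax objective from the paper: it assumes a classifier that outputs logits for each view and uses a symmetric KL divergence as a stand-in agreement measure. The names `agreement_loss` and `weight` are hypothetical; see the GitHub repository above for the actual method.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Negative log-likelihood of the true label."""
    return -math.log(max(probs[label], 1e-12))


def kl_divergence(p, q):
    """KL(p || q), with q clamped away from zero for safety."""
    return sum(pi * math.log(pi / max(qi, 1e-12)) for pi, qi in zip(p, q) if pi > 0)


def agreement_loss(logits_a, logits_b, label, weight=1.0):
    """Supervised loss on both views plus a penalty when they disagree.

    logits_a and logits_b are the model outputs for two different
    augmentations of the same input (assumed to be computed elsewhere).
    """
    p, q = softmax(logits_a), softmax(logits_b)
    supervised = cross_entropy(p, label) + cross_entropy(q, label)
    disagreement = 0.5 * (kl_divergence(p, q) + kl_divergence(q, p))
    return supervised + weight * disagreement
```

When both views produce identical predictions the penalty term is zero, so the loss reduces to the ordinary supervised loss on the two views.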

Vision Transformer for Fast and Efficient Scene Text Recognition

International Conference on Document Analysis and Recognition (ICDAR) 2021. Links: arXiv and Springer Nature

by Rowel Atienza

GitHub: https://github.com/roatienza/deep-text-recognition-benchmark

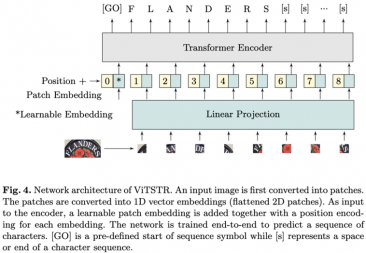

Scene text recognition (STR) enables computers to read text in natural human environments, such as billboards, road signs, product labels and paper bills. STR is more challenging than the more structured problem of OCR. State-of-the-art (SOTA) STR models are complex (made of 3 stages) and slow. Using a vision transformer, we built a fast, efficient and robust single-stage STR model with performance comparable to SOTA. We call our model ViTSTR.

Gaze on Objects

IEEE/CVF Computer Vision and Pattern Recognition (CVPR) Workshops 2021.

by Henri Tomas, Marcus Reyes, Raimarc Dionido, Mark Ty, Jonric Mirando, Joel Casimiro, Rowel Atienza and Richard Guinto (Samsung R&D PH)

GitHub: https://github.com/upeee/GOO-GAZE2021

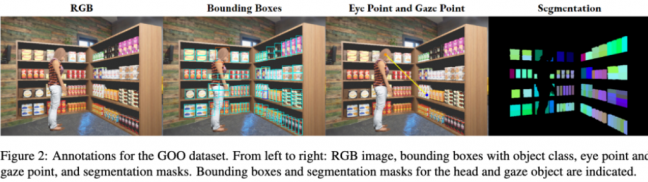

Gaze signifies a person’s focus of attention. However, there is a lack of datasets that can be used to explicitly train models on gaze and the object of attention. In Gaze on Objects (GOO), we built a dataset of both synthetic and real people looking at objects. Our dataset is publicly available on GitHub.