Electrical and Electronics Engineering Institute

Recent News

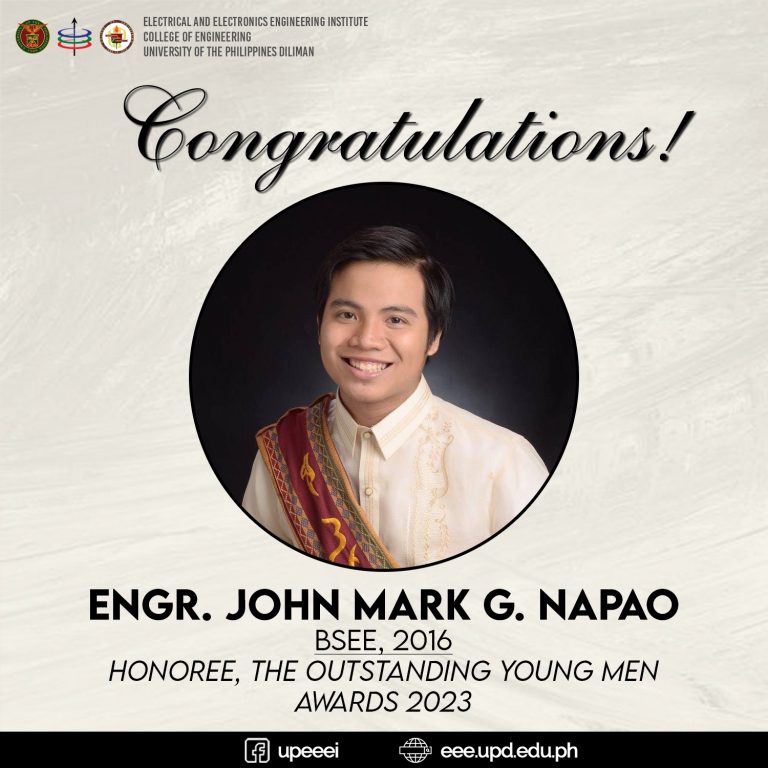

JCI Philippines’ The Outstanding Young Men (TOYM) Awards 2023 recently honored EEEI alumnus Engr. John Mark G. Napao (BSEE, 2016). He is recognized for his contributions to sustainable energy through his organization, SOLAR Hope.

UP Diliman (UPD) and the Department of Science and Technology Advanced Science and Technology Institute (DOST-ASTI) recently signed a three-year memorandum of understanding (MOU).

The University of the Philippines (UP) took part in two memorandum signings at the HES Auditorium in the National Electrification Administration Building, Quezon City, on January 18, 2024.

Information on EEEI Undergraduate and Graduate Programs, Admission Policies, Registration Procedures, Academic Rules, etc.

Read about EEEI’s commitment to serving the larger community through various works and collaborations.

Graduate Program Application

Applications for the 1st Semester of AY 2024-2025 are open until June 30, 2024.

Student Organizations

List of active student organizations inside the EEEI.

EEEInfo

Portal of student services with lots of useful information for current EEEI students.

Downloads

Downloadable forms for students, faculty, and staff.

Support Services

Eduroam, VPN, EEE Account, etc.

VIDEOS

EEE graduating students attended the hybrid seminar “what’s nEEExt?” on 29 June 2023 at VLC, EEE Building, and via Zoom. The seminar invited EEE alumni, who talked about their experiences after graduation and their current work in various fields.

Get to know each EEE laboratory, its faculty members, and its requirements through this laboratory information session held on 1 March 2023.